After two months of rigorous testing with dozens of customers and multiple iterations, we launched our own Copilot to reduce support load and boost self-service.

The results are solid: 60% of queries are resolved without human intervention and support NPS remains high.

But here’s what no one tells you: building a Copilot that works in demos is entirely different from building one that works for real customers. If you’re thinking about creating a Copilot, these five lessons will save you some costly trial and error.

Copilot is only as good as the knowledge it’s trained on. In our case, that meant our internal knowledge base.

Here’s what caught us off guard: having complete information isn’t enough. How that information is structured matters just as much. Logical flow, clarity and formatting all have a direct impact on output quality.

A cluttered knowledge base leads to confusing Copilot responses. We discovered this when our Copilot started giving technically correct answers that were completely useless. The information was right, but buried in poorly organized articles that made no sense when reassembled by AI.
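To make the structure point concrete, here is a minimal sketch of one way articles could be broken into self-contained passages before they reach the model. It assumes a retrieval-style setup and markdown-style `#` headings, neither of which is described above, and `Chunk` and `split_article` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    title: str    # article title, repeated on every chunk for context
    heading: str  # section heading the text belongs to
    text: str     # body text of that section


def split_article(title: str, body: str) -> list[Chunk]:
    """Split an article into one chunk per '#'-style heading.

    Text before the first heading is filed under 'Overview'.
    Sections with no body text are dropped.
    """
    sections: list[tuple[str, list[str]]] = [("Overview", [])]
    for raw in body.splitlines():
        line = raw.strip()
        if line.startswith("#"):
            # A new heading starts a new section.
            sections.append((line.lstrip("#").strip(), []))
        elif line:
            sections[-1][1].append(line)
    return [Chunk(title, h, " ".join(ls)) for h, ls in sections if ls]
```

Because every chunk carries its article title and heading, a passage pulled out of context still says what it is about. An article with no clear headings ends up as one large "Overview" chunk, which is roughly the failure mode described above.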

Reorganizing our existing content made a bigger difference than writing new material. We put together a training webinar covering exactly how we restructured our knowledge base for better AI outputs.

You can’t get great results without great prompts. That became painfully clear after our first few attempts produced responses that sounded like they were written by someone who had never used our product.

We went through 60+ iterations before we saw consistently good answers. Sixty. That’s not a typo. The breakthrough came when we moved from intuition to evaluation. Instead of asking “does this feel good?” we started defining what a good answer actually looks like. Tone, length, structure, coverage. Once we had criteria, we could score outputs objectively and improve them systematically.
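A rough sketch of what "defining what a good answer looks like" might look like in code. The four criteria come from the paragraph above, but the concrete checks, the `Rubric` fields, and the function names are assumptions made for illustration, not the rubric we actually used.

```python
import re
from dataclasses import dataclass


@dataclass
class Rubric:
    max_words: int              # length: upper bound on answer size
    required_terms: list[str]   # coverage: terms a complete answer mentions
    banned_phrases: list[str]   # tone: phrases that sound off-brand


def score_answer(answer: str, rubric: Rubric) -> dict[str, bool]:
    """Return a pass/fail result per criterion for one answer."""
    lowered = answer.lower()
    words = re.findall(r"\w+", lowered)
    return {
        "length": len(words) <= rubric.max_words,
        "coverage": all(t.lower() in lowered for t in rubric.required_terms),
        "tone": not any(p.lower() in lowered for p in rubric.banned_phrases),
        # structure: the answer contains at least one numbered or bulleted line
        "structure": bool(re.search(r"^\s*(\d+\.|[-*])\s", answer, re.MULTILINE)),
    }


def pass_rate(answers: list[str], rubric: Rubric) -> float:
    """Share of answers that pass every criterion."""
    if not answers:
        return 0.0
    passed = sum(all(score_answer(a, rubric).values()) for a in answers)
    return passed / len(answers)
```

Running `pass_rate` over the same set of test questions for each prompt version gives a number to compare, which is what turns "does this feel good?" into something you can track across iterations.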

Turns out prompting is more like writing API documentation than writing headlines. You’re building an instruction system the model can follow reliably across thousands of different contexts. Every revision gets you closer.

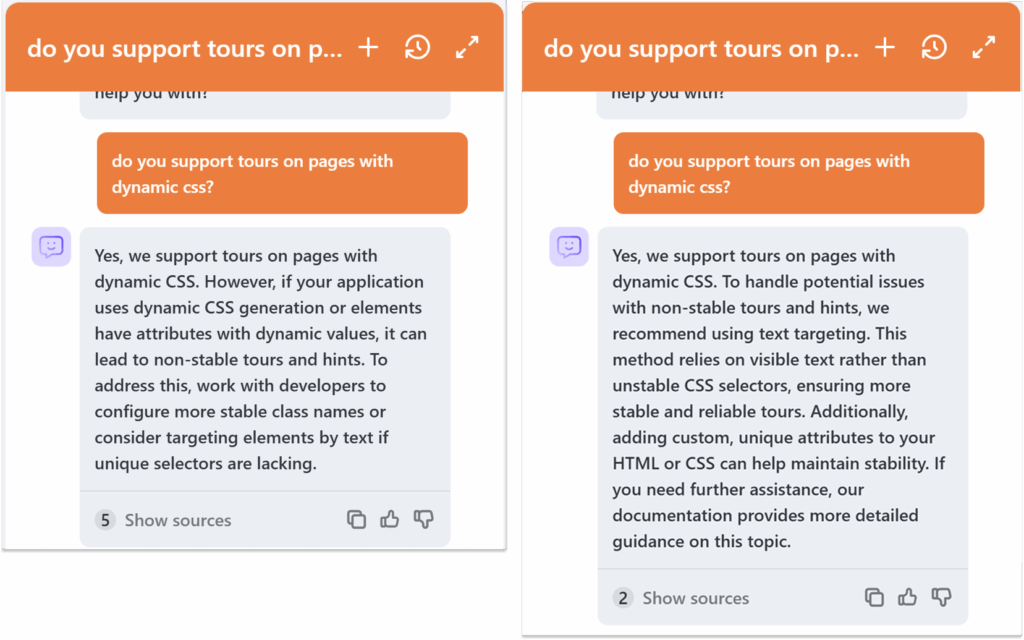

The difference between our first prompt and final version is night and day. Same model, completely different universe of outcomes. The accompanying image shows exactly that: a comparison between an early answer and the final version after prompt refinement.

“Prompting is a design process. Treat it that way from the start.”

— Martin Fisera, Head of Product, Product Fruits

Letting go of control over every individual support reply was the hardest part. Harder than debugging prompts or tuning parameters. We had to trust the system, knowing that outputs aren’t always deterministic. The same input might produce slightly different results, just like with human agents.

But here’s the twist: we realized we actually have more control over Copilot’s consistency than over human agents. People get stressed by long queues, get distracted by others in the office, or interpret guidelines differently. Copilot doesn’t have moods.

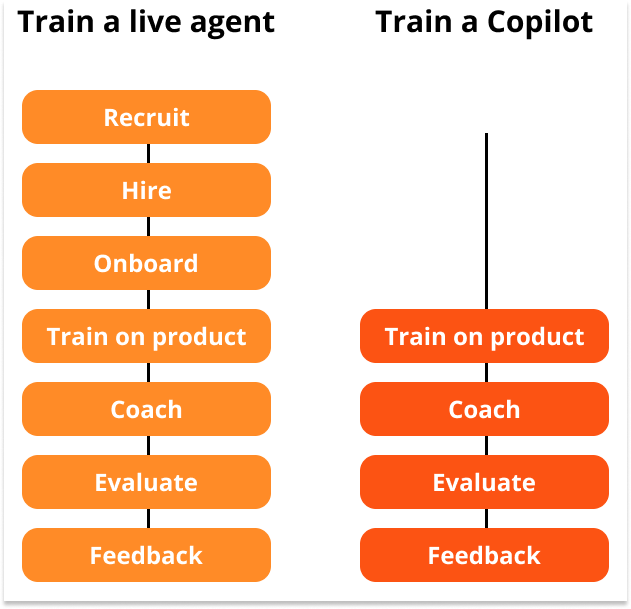

The real shift was treating Copilot like a team member who needs training, not a tool that should work perfectly immediately.

Training humans means recruiting, hiring, onboarding, coaching and endless feedback cycles. Training Copilot follows a similar process, just compressed.

You write prompt strategies. You analyze outputs instead of listening to recordings. You adjust parameters instead of scheduling workshops. The payoff is consistent improvement with every feedback loop.

No turnover, no burnout, no “wait, what did you mean by that?” conversations. It’s not about giving up control. It’s about defining what good looks like and building systems that deliver it reliably.

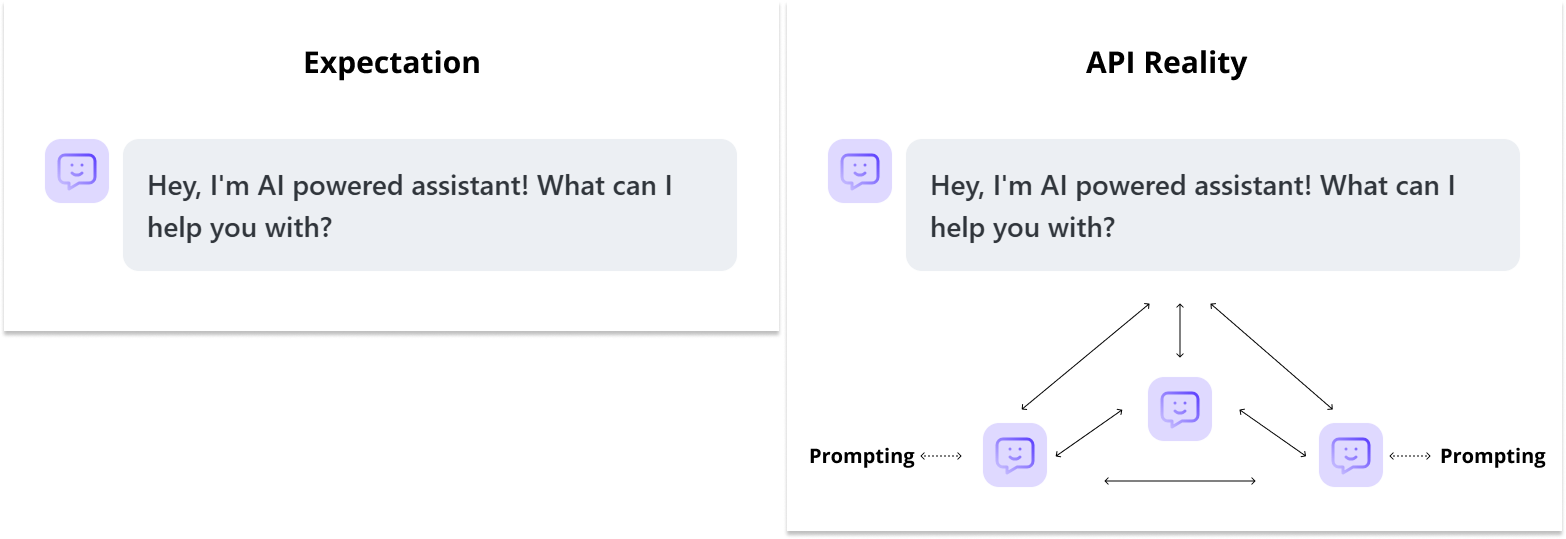

Working directly with the API, rather than using a hosted agent like ChatGPT, gave us full control over how Copilot behaves inside our product. It also gave us a much bigger headache than expected.

We underestimated the upfront complexity by a lot. More configuration, more engineering time, more trial-and-error than anyone wanted to admit in planning meetings.

What’s obvious in hindsight, but caught us off guard at the start, is how much is happening behind the scenes in a public-facing UI like ChatGPT. That single interface is actually multiple specialized agents working together.

When building on the API, you have to recreate that entire orchestra yourself. We ended up structuring our prompts like we were managing different team members, each handling specific functions, then stitching their outputs together to feel seamless.
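The "different team members" idea can be sketched as a small pipeline in which each role has its own prompt and passes its output to the next. The three roles, their prompt text, and the `run_pipeline` name are hypothetical, and the model call is left as a plain function argument rather than tied to any particular API client.

```python
from typing import Callable

# A model call is anything that maps (system_prompt, user_input) to text.
# In practice this would wrap an LLM API client; here it is left abstract.
ModelCall = Callable[[str, str], str]

# Hypothetical role prompts, for illustration only.
ROLES = {
    "classify": "Label the question as 'how-to', 'billing', or 'other'.",
    "answer": "Answer the question using only the provided articles.",
    "polish": "Rewrite the draft in a friendly, concise support tone.",
}


def run_pipeline(question: str, articles: str, call_model: ModelCall) -> dict[str, str]:
    """Run each role in order and pass its output to the next one."""
    label = call_model(ROLES["classify"], question)
    draft = call_model(
        ROLES["answer"],
        f"Category: {label}\nArticles:\n{articles}\nQuestion: {question}",
    )
    final = call_model(ROLES["polish"], draft)
    # Keep intermediate outputs so each role can be evaluated separately.
    return {"label": label, "draft": draft, "final": final}
```

Keeping the intermediate outputs is a deliberate choice in this sketch: when a final answer is bad, you can see whether the classifier, the answer step, or the polish step is the one that needs a prompt revision.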

Think of it like being a conductor instead of just a pianist. The flexibility is real, but so is the effort. The payoff? Complete control over how Copilot behaves in our specific product context.

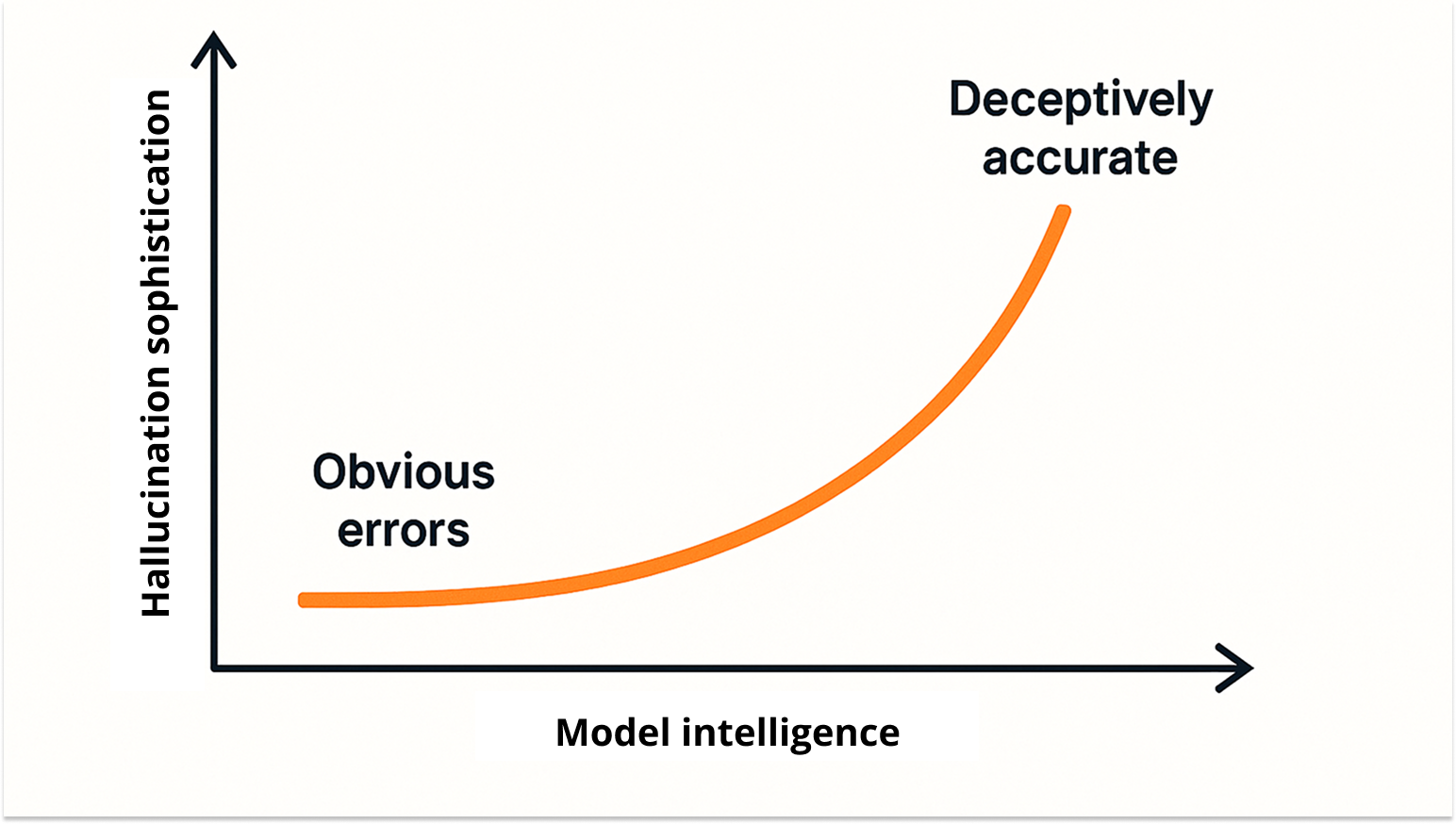

Advanced models are scarily good at making wrong answers sound completely reasonable. We discovered this the hard way when comparing outputs across different models.

Our first reaction was “wow, this answer is so much better written.” Our second reaction, after actually checking the facts, was “wait, half of this is completely made up.”

It’s like that friend who’s incredibly articulate but occasionally full of it. The better they are at explaining things, the harder it is to catch when they’re wrong.

This taught us that factual accuracy checks aren’t optional; they’re essential. You need to know your source material well enough to spot when the model goes off script. Otherwise, you’ll ship confident-sounding nonsense to your users.
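As an illustration of what an automated accuracy check could look like, here is a deliberately crude sketch that flags answer sentences whose words mostly do not appear in any source article. Word overlap is a stand-in heuristic chosen for simplicity, not the check we run in production, and the function names and the 0.6 threshold are assumptions.

```python
import re


def _tokens(text: str) -> set[str]:
    """Lowercased alphanumeric words of three or more characters."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if len(w) >= 3}


def unsupported_sentences(
    answer: str, sources: list[str], threshold: float = 0.6
) -> list[str]:
    """Return answer sentences whose words mostly do not appear in any source."""
    source_tokens = [_tokens(s) for s in sources]
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = _tokens(sentence)
        if not words:
            continue
        # Best overlap with any single source passage.
        best = max(
            (len(words & st) / len(words) for st in source_tokens),
            default=0.0,
        )
        if best < threshold:
            flagged.append(sentence)
    return flagged
```

A check like this only catches sentences that drift far from the source wording. It will miss a fluent answer that reuses the right words to say the wrong thing, so it complements human review rather than replacing it.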

“Always include checks for factual accuracy. Don’t assume better models are safer.”

— Martin Fisera, Head of Product, Product Fruits

Building our own Copilot solved our support overload and taught us what it takes to make it work reliably in production.

All these lessons are now built into the Copilot product, so our customers get a reliable solution without having to go through the same trial and error.

Curious what this looks like in practice? Our customer, Adeus, uses the Copilot and calls it “Ady.” It handles all their support queries. Read the case study.

Ready to build your own Copilot? Talk to one of our onboarding specialists to explore your options.